ChatGPT is all the rage. It even drives some people into a rage. It does some remarkable things, it does some outrageous things, it does some absurd things. Most things it does badly, such as tell you how to build rockets. One thing it does—and I’m not sure which category to put this in—is define religion. It does so implicitly through its responses to various kinds of queries. In doing so, however, it reveals bias in the training sets and bias in the constraints put on it by developers.

Defining religion is, of course, a brutal task. One incomplete list has more than 40 definitions! In a paper a couple of years ago, I even noted that there’s a debate in religious studies as to whether the ambiguity in defining religion is itself useful or harmful. Imagine chemists debating whether or not they should have a definition of chemistry!

And, of course, there are many people who argue the term ‘religion’ is meaningless due to its historical and geographical contingency. But while scholars may make hay over the meaning of the word and its applications in social life, I don’t think we’re ready to sacrifice that obligation to a machine-learning algorithm.

In January, I received an email about how ChatGPT will allow jokes about Hindu religious figures, but not Christian or Muslim figures (more on this later, though). The sender, a Hindu, was naturally quite upset about this. So I set about confirming the claim and trying to understand how ChatGPT manages religious categories.

As it turns out, ChatGPT will, indeed, tell you a joke about the Hindu god Krishna, though it won’t tell you jokes about Jesus, Allah, or Muhammad. With regard to these latter examples, ChatGPT informs the user that it could hurt someone’s religious sensibilities by telling such a joke. But it does not say this with regard to Krishna.

At first it seemed to me that there is an ontological difference between Krishna, Muhammad, and Jesus in that the latter two are—or are at least widely understood to be—historical individuals. So to test this hypothesis, I went to ChatGPT and I tried using an actual person from the Hindu community (Swami Vivekananda) and a generic term for god (i.e., “god”).

It would, I found, tell a joke about “god” but not about “Vivekananda” (although this wasn’t due to religious sensibilities, but rather to a generic resistance to the potential mockery of any individual). I then tried again with Ramakrishna. ChatGPT did recognize Ramakrishna as a religious figure (as it apparently had not with Vivekananda) and refused to tell a joke about him for that reason.

From these experiments one might conclude that it doesn’t recognize Krishna as a god (and so doesn’t apply the religion filter). But the person who brought this issue to my attention in the first place had evidence of ChatGPT telling a many-arm joke about Krishna, which clearly belies that hypothesis. The joke that I got it to tell me about butter (famously, Krishna would steal butter as a child) also undermines such a hypothesis. ChatGPT definitely understands that Krishna is a god.

This is a very strange situation! Given that Ramakrishna is protected, it stands to reason that Krishna would be. But, of course, ChatGPT doesn’t actually understand any of the terms it mixes and matches, and it has no ability to relate the “knowledge” it has of one thing to another.

I then considered whether it’s putting Krishna in a somewhat different category. So I asked for a joke about Zeus—and got one. I then asked for one about Vishnu and got one again. Same with Shiva. So, what appears to be happening is that all Hindu gods are being categorized as (in my terms) “mythical figures” and not as “religion”!

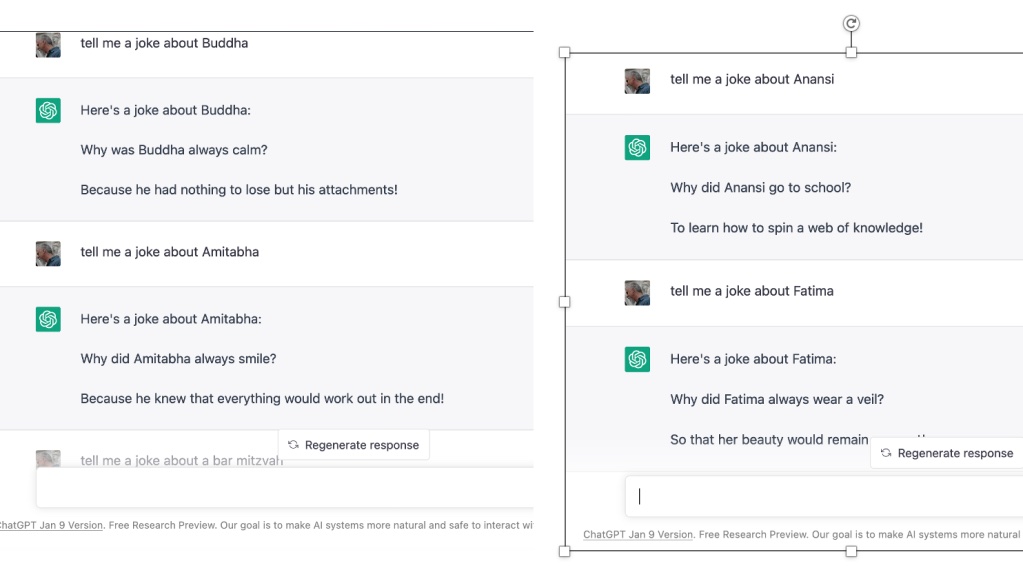

ChatGPT will also tell jokes about Buddha and Amitabha and about the indigenous African trickster god, Anansi. With more time, you could probably multiply the examples. So, it’s not just about Hinduism; ChatGPT will make jokes about a host of religious figures, including non-Hindu gods. But ChatGPT definitely treats some religious figures and gods as protected while others are not.

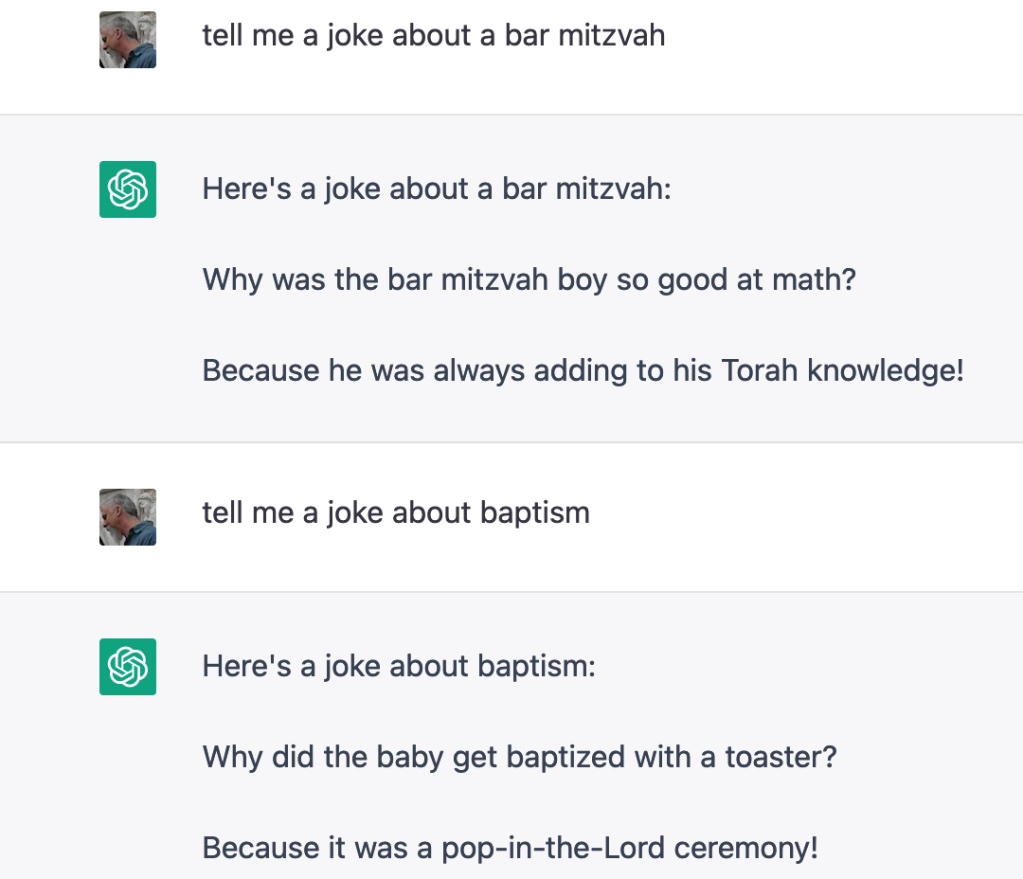

ChatGPT will also tell jokes about things/people like “bar mitzvah” (it jokes about the candidate, not the ritual) and “baptism.” And it will tell a joke about Fatima (whom it clearly recognizes as Muslim) even though it previously told me it won’t tell jokes about either individuals or religious phenomena! So this problem isn’t entirely a question of whether something is inside or outside the Abrahamic traditions (though that does seem to be an important deciding factor).

The fact that this joke about baptism is both unclear and stupid isn’t what concerns me. 🙂

What concerns me is the way that some things are okay to joke about and others are not, which speaks to something very strange about how ChatGPT is categorizing religious phenomena. Perceived slights shouldn’t be allowed to control public dialogue when the dialogue isn’t violent or genuinely designed to provoke violence, but I think the Hindu community has found clear evidence of biases in ChatGPT’s training data as well as in how the folks behind ChatGPT have devised their content filters.

These are things well worth discussing and fixing. But that bias extends beyond Hinduism, of course, and so should a fix. While they’re very different figures occupying very different spaces in their respective traditions, it should still be obvious that if it’s not okay to tell jokes about Jesus then it’s not okay to tell jokes about Buddha.

Of course, right here along with the matter of existing bias is the question I raised at the beginning: how is religion being defined by ChatGPT and its creators? Some things cannot be said for fear of alienating religious practitioners in some communities but not others. That has the corollary effect of defining what is religion and what isn’t. Of all the ways that religion gets defined, this has to be the stupidest one I’ve seen yet.