It’s primary season in America, that special time when we come together as a nation and renew the democratic process by relentlessly quantifying popular opinion. According to the polls, for example, evangelicals love Donald Trump. Recent polls also indicate, somehow, that they don’t like him at all. Twenty-nine percent of Americans believe President Obama to be an adherent of Islam, a religion that nearly one in three Iowa Republicans thinks should be illegal. (Perhaps because those Iowans took notice of one poll, the results of which seem to indicate that half of American Muslims support the imposition of sharia law, and nearly one-fifth justify violence as a means to this end.)

Inventing American Religion: Polls, Surveys, and the Tenuous Quest for a Nation’s Faith

Robert Wuthnow

Oxford University Press

(October 1, 2015)

Polls can produce inconsistent, and sometimes baffling, representations of American belief. They also range in accuracy, from sophisticated sociological surveys to thinly veiled propaganda (the sharia law poll being an example of the latter). Furthermore, as Robert Wuthnow documents in Inventing American Religion: Polls, Surveys, and the Tenuous Quest for a Nation’s Faith, polling can itself influence the way that we understand its object of study. Wuthnow’s new book documents how the polling industry has influenced—and distorted—the way that religion is understood in America, by religious practitioners and scholars alike.

Robert Wuthnow is professor and chair of the Department of Sociology at Princeton University. The Cubit recently reached out to him by phone to discuss Jimmy Carter, the “Nones,” and guidelines for interpreting poll data about religion.

This interview has been edited for clarity and length.

Andrew Aghapour: How did polls become a significant tool for understanding American religion, and what made them so attractive?

Robert Wuthnow: Broad commercial polling began in the 1930s, when George Gallup, Sr. paid for polls by getting a couple hundred newspapers to pay for his columns. Religion was something that was of personal interest to him, but the pieces about religion would simply occur from time to time, at Thanksgiving, Christmas, or Easter. The rest of the polls were about political topics, including the Depression, what was going on in Washington, and so forth.

There really wasn’t much interest [in religion] at the time, even though Gallup tried to make the poll results seem interesting, and the newspapers were carrying them. Even in the 1940s when he started asking questions about belief in God, there wasn’t much response—the limited response there was came mostly from church leaders, who were skeptical. What does it mean to say that 95% of the public believe in God? That doesn’t tell us much of anything, and so it took a long time for Gallup and his competitors to sell the idea that polling about anything, including religion, was of interest or importance.

It wasn’t until the 1950s, during the Cold War period—with the idea that America is a religious country and the Soviet Union is a godless Communist country—that people started gravitating to the idea that polls are telling us something interesting. This was also rooted in the notion, increasingly prominent in the 1950s, that science was the big wave of the future. Since Gallup and other pollsters claimed that their polls were scientific, that also attracted attention, at least in the media if not in the general public.

Then, in 1976, there occurred what you call “the most notable instance to date in which polling played a major role in defining a significant feature of the religious landscape.” Could you tell us about the year of the evangelical?

The year of the evangelical was 1976, when Jimmy Carter was elected to the White House, and the role of polling was to greatly expand the number of Americans who were evangelicals.

How is it possible that a poll could do that? George Gallup, Jr. was now in charge of religious polling at the Gallup Organization. George Jr. was a born-again Episcopalian himself, and had started doing more polls about evangelicals. When Jimmy Carter came along, journalists were asking themselves, “What does it mean to be a born-again evangelical?” George Gallup, Jr. had the answer, because he’d asked questions in his polls about whether people considered themselves born again, had ever had a born-again experience, what they believed about the Bible, whether they considered themselves evangelicals, and so forth.

Gallup said there might be 50 million Americans who are evangelicals, and journalists ran with that. It was a much higher number than had been assumed before, [which were based on counting members of those denominations within] the National Association of Evangelicals.

In addition to changing the numbers, polling also changed political perception, [by implying] that evangelicals were a voting bloc. That made sense to journalists because Catholics were a voting bloc for John F. Kennedy in 1960—so surely evangelicals must have been a voting bloc for Jimmy Carter. That wasn’t the case at all. Some of the leading, most powerful, influential evangelical leaders were actually for Gerald Ford. There was a lot of diversity among evangelicals themselves that got masked by being lumped together in the polls as if they were all the same thing.

Did these polls utilize categories that counted more people as evangelicals, relative to other measurements?

They did. The way they got 50 million was basically inventing a new question that said something to the effect of, “Have you ever had a born-again religious experience, or something similar to a religious awakening?” And that was pretty much it. Evangelical leaders themselves didn’t believe the Gallup numbers were right, and [they thought that] the best way to combat those Gallup numbers was to ask Gallup to do another survey.

And so in 1978, Christianity Today, a leading periodical for evangelicals, paid Gallup to do a big survey, and in addition to asking the born-again question, they asked questions about belief in the Bible, belief in Jesus, and intent to convert others.

As a result, Gallup revised its estimate downward, to less than 30 million. Would it be fair to say that the “year of the evangelical”—the notion of a politically focused evangelical upsurge—was more an artifact of bad polling than an actual phenomenon in the history of American religion?

It was both. Other, better studies—by the American National Election Studies, for instance, and the General Social Survey at the University of Chicago—were also showing that if you chose people based on their Protestant denomination and figured out the ones who were the most biblically conservative, those evangelicals were more politically active than they had been in the past.

It had formerly been the case that evangelicals fit the notion of the fundamentalist who withdraws from society, whereas in 1976 (and subsequently), evangelicals were more politically active whether they voted for Carter or not. The fact that Carter was an evangelical gave evangelicals the sense that, “Oh, maybe we do count, and maybe we should get out and vote.”

There was some of that, but as far as journalistic reports were concerned, the year of the evangelical was mostly driven by the way the polls were asking the questions.

Today’s most controversial polling trend is the rise of the “Nones”—those who indicate religious non-affiliation in surveys by selecting “none of the above.” Nones seem to have jumped from a stable 6-8% of the population during the 1970s and 1980s to, in recent years, 16-20%. Do you think this reflects a shift toward disbelief in America?

The rise of the Nones—meaning people who say they don’t have a particular religious affiliation or actually identify themselves as being non-religious—does appear to be a trend that is picked up in the very best academic surveys as well as in polls of lesser quality.

So let’s say something is going on—and the reason most of us who do sociology of religion from an academic standpoint think something is going on is not because of the more popularized polls, but because the better-quality academic surveys also show the same thing and indeed have been showing the same thing for a while. Then the question is, “What exactly is going on?” And there appear to be several conclusions.

One is that a number of the people who are categorized as Nones still claim to believe in God. Many of them occasionally attend religious services; hardly any of them identify clearly as atheist. So they may be, for some reason, identifying themselves as non-religious even though they still believe. We also know from some of the surveys that they identify themselves as “spiritual but not religious,” meaning that somehow they’re interested in God and spirituality, and existential questions of life and death, but are turned off by organized religion.

Second, the political climate over the last ten to fifteen years appears to be part of the story. The religious right has become so publicly identified with conservative politics that people who were formerly willing to say, “I’m religious” in [denominational] terms are now saying, “You know what, I just don’t want to have anything to do with it.” That’s a hard story for many of us to believe, but there does seem to be pretty good data supporting it, as part of the story at least.

Then, number three, is that the studies that actually ask a person the same question a year or two later are finding that individuals change their minds a lot. That seems to occur especially with people who are identified as Nones. One paper identifies at least half of the Nones as “Liminals,” people who are trying to decide, on the cusp of making up their mind. You ask them one year, and they say “I’m non-religious,” you ask them the same question the next year and they’ll say they’re religious and they’ll tell you what kind of religion they are. Or [vice-versa].

Now this may be characteristic of the times in which we live—people are uncertain about who they are, about what they think religiously—but it also challenges how we think about polls. Polls have always assumed that whatever a person says is reliable, and that they really mean it and stick with it. So if you find any changes over time, that’s significant because polls are measuring stable opinions—if those change, that’s important.

But the fact of the matter seems to be that polls are [measuring] answers to questions that are not very thoughtful, because polls are hastily done by telephone rather than in person. If you’re a respondent in a poll, you’re being asked anywhere from four to five complex questions every minute. They’re throwing questions at you so rapidly that you often have no idea whether you gave the answer that you really believe. And then they only have an 8% response rate anyway, so you have no idea who the other 92% are who weren’t in the poll.

Such small response rates must also distort measurements of minority groups.

The polling industry tries to take a national sample and therefore, for the most part, the results represent the majority much more so than the minority. One of the distortions that happen is what I call “white-norming.” Let’s say, in a national poll of a thousand respondents, upwards of eight hundred and fifty of those are going to be white respondents and somewhere around 12% or 13% are going to be African Americans. Pollsters, first of all, get results that mostly pertain to the white majority.

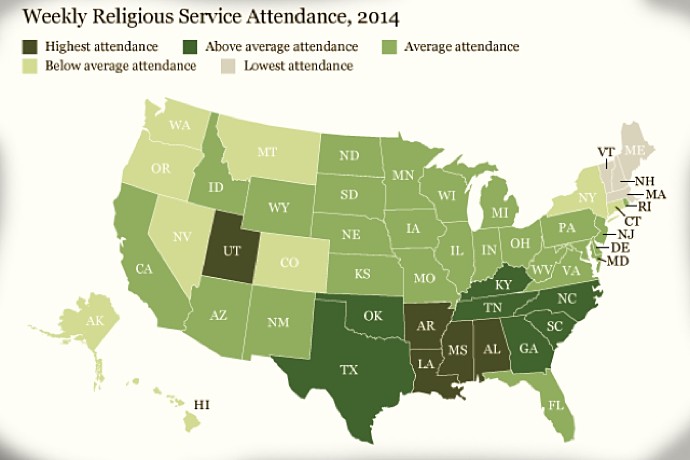

Secondly, they often ask questions that reflect white majority trends and, thirdly, since the response rates are so bad, they may weight the data in a way that reflects the white majority better than the African-American minority. One of the consequences is that some of the polls, not all of them, suggest that there are really no differences between white Americans and African Americans in terms of things like church attendance, religiosity, prayer. And yet when you look at the occasional major study of African Americans, with a large number of respondents and some white Americans for comparison purposes, the evidence suggests that African Americans are much more religious on average than white Americans in terms of how many hours in a given week they may spend at the church, or how important it is to them, or how often they see church friends, and so forth.

That’s an example of white-norming giving us a kind of false picture of, in this case, African Americans. And some of the same things are in danger of happening in studies that look at other religious minority groups.
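The sample-size side of this point can be made concrete with a rough calculation. The sketch below uses the textbook margin-of-error formula for a proportion under simple random sampling, which is an assumption: real polls apply weights and design adjustments that usually widen these margins. The function name `margin_of_error` is illustrative, and the subgroup counts are taken from the round numbers quoted above.

```python
import math

def margin_of_error(n, p=0.5, z=1.96):
    """Approximate 95% margin of error for a proportion.

    Assumes simple random sampling with no weighting (a simplification).
    p=0.5 gives the widest, most conservative margin.
    """
    return z * math.sqrt(p * (1 - p) / n)

total_n = 1000                   # "a national poll of a thousand respondents"
white_n = 850                    # "upwards of eight hundred and fifty"
black_n = round(total_n * 0.125) # "around 12% or 13%" of the sample

for label, n in [("full sample", total_n),
                 ("white respondents", white_n),
                 ("African American respondents", black_n)]:
    print(f"{label}: n={n}, margin of error = +/-{margin_of_error(n) * 100:.1f} points")
```

Under these assumptions the margin for the full sample is roughly 3 points, while the margin for the 125 African American respondents is roughly 9 points. Real differences between groups can therefore disappear into sampling noise unless a study deliberately includes a large number of minority respondents.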

What guidelines should we keep in mind when we see poll data about religion in the news?

Well, first and foremost, remember that the poll numbers being reported may be in the right general ballpark, but probably can’t be interpreted very precisely in terms of the small trends that are being reported. For instance, if somebody reports that American religion is declining because church attendance is down a percentage point this year from last year, don’t pay much attention to that.

Secondly, pay attention, skeptically, to the way the headline describes the data. So once again, let’s imagine that the church attendance rate is lower this year than last year, let’s say it’s two or three percent lower all of a sudden. Does the headline say that religion is “on the skids” or that “America is losing its faith”? That’s more the journalist’s or the editor’s fault than the pollster’s fault. Polling usually doesn’t produce news. It usually just produces possibly interesting information—it isn’t news in the sense that something really happened, and so editors and reporters have to think of some way to make it seem like news.

Third, always remember that the polls have a very low response rate. Most of the polls, whether about religion or politics, have an eight percent response rate now. It means that ninety-two percent of the people who should have been contacted for it to be a representative poll are not there, and we don’t know what they would have said, and so we’re only making guesses. The guesses could be wrong, and often are wrong.

Finally, remember that where political polls have occasional checkpoints—elections actually happen, and pollsters can [subsequently] adjust weighting factors so that the data are closer next time—with religion questions, they don’t have anything like that. So if we hear that x percentage of the public is not really Catholic even though they say they are, or x percentage of the public like the Pope or they don’t like the Pope, we can only ask ourselves, “Well, does that make sense with what we know from other sources, and from talking with our neighbors?”
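Two of these guidelines lend themselves to a back-of-the-envelope check. The sketch below is illustrative only: the attendance figures (40% and 39%) and the sample sizes are hypothetical, the function names are invented for this example, and the first formula assumes two independent simple random samples, which real polls only approximate.

```python
import math

def change_margin(n1, n2, p1, p2, z=1.96):
    """Approximate 95% margin of error for the difference between
    two independent sample proportions (simple random sampling assumed)."""
    se = math.sqrt(p1 * (1 - p1) / n1 + p2 * (1 - p2) / n2)
    return z * se

def nonresponse_bounds(observed_share, response_rate):
    """Worst-case range for the true share in the whole target sample.

    The low bound assumes every nonrespondent would have said "no";
    the high bound assumes every nonrespondent would have said "yes".
    """
    low = observed_share * response_rate
    high = observed_share * response_rate + (1 - response_rate)
    return low, high

# Hypothetical: attendance reported at 40% last year and 39% this year,
# each from a poll of 1,000 respondents.
margin = change_margin(1000, 1000, 0.40, 0.39)
print(f"Observed change: 1.0 point; margin on the change: +/-{margin * 100:.1f} points")

# Hypothetical: 40% of respondents say "yes", with the 8% response rate cited above.
low, high = nonresponse_bounds(0.40, 0.08)
print(f"Worst-case true share: between {low * 100:.1f}% and {high * 100:.1f}%")
```

With these made-up inputs, the margin on a year-to-year change is around 4 points, so a drop of one, two, or three points is within sampling noise. The worst-case bounds show why an 8 percent response rate leaves pollsters guessing: without assumptions about the people who did not respond, the data alone are compatible with almost any true figure. Weighting narrows that range only to the extent that its assumptions hold.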