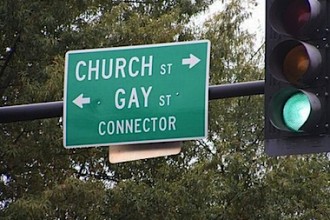

Does Religion Condemn Homosexuality?

…hink about our bodies. What of Christianity, the dominant religion in the United States? A large majority of Americans identify as Christian, and even non-Christians may find themselves holding “Christian” ideas about sex and proper and improper uses of the body. Additionally, whether you are Christian or not, in the United States you will be subject to laws that are directly influenced by Christianity’s historical ambivalence about, and even fea…